The IoT sensor market is growing at 24%, but some pockets of innovation will see explosive growth. This is where the big technology bets are being placed.

Sensors in autonomous vehicles are creating a tornadic effect on innovation in adjacent markets. To determine a vehicle’s location, inputs from embedded sensors, including cameras, radar, lidar, and GPS, are combined and analyzed in a process called “sensor fusion”. Combined with real-time traffic reports and weather conditions, this fused picture lets vehicles navigate themselves from point A to point B. The resources focused on solving this complex problem are creating a flurry of innovation in adjacent areas. MIT spun off Optimus Ride, a VC-backed startup with support from Nvidia, to apply autonomous technology to vehicles like forklifts. Imagine a “dark factory” that requires no human engagement to produce goods. And the heads-up displays in new vehicles, based on augmented reality technology, can be untethered from the vehicle and used to help navigate city streets on foot. Global players like Bosch, Panasonic, Denso, and Visteon are pouring money into AR/VR technologies.
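
To make “sensor fusion” concrete, here is a minimal sketch in Go of one simple fusion rule, inverse-variance weighting, in which each sensor’s position estimate counts in proportion to how precise it is assumed to be. The sensor names, coordinates, and variances are hypothetical, and real vehicles use more elaborate estimators (Kalman filters, for instance) that also track motion over time.

```go
package main

import "fmt"

// Estimate is one sensor's reading of the vehicle's position (meters, in a
// local x/y frame) together with its variance (meters squared). A smaller
// variance means the sensor is assumed to be more trustworthy.
type Estimate struct {
	Source   string
	X, Y     float64
	Variance float64
}

// Fuse combines estimates by inverse-variance weighting: each estimate is
// weighted by 1/variance, so precise sensors dominate noisy ones. It returns
// the fused position and the combined variance. It assumes at least one
// estimate and strictly positive variances.
func Fuse(estimates []Estimate) (x, y, variance float64) {
	var sumW float64
	for _, e := range estimates {
		w := 1 / e.Variance
		x += w * e.X
		y += w * e.Y
		sumW += w
	}
	return x / sumW, y / sumW, 1 / sumW
}

func main() {
	// Hypothetical readings; the values are illustrative, not real sensor data.
	readings := []Estimate{
		{"gps", 10.0, 5.0, 4.0},
		{"lidar", 12.0, 6.0, 1.0},
		{"camera", 11.0, 4.0, 4.0},
	}
	x, y, v := Fuse(readings)
	fmt.Printf("fused position: (%.2f, %.2f), variance %.2f\n", x, y, v)
}
```

The fused estimate lands closest to the lidar reading because lidar was assigned the smallest variance, and the combined variance is lower than any single sensor’s, which is the payoff of fusing several inputs.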

The embedded sensors that go into an autonomous vehicle draw their power from the vehicle’s electrical system. Now consider how many “things” have no power source at all, and the opportunity to combine their location with other sensor input. As IoT lights up devices that were previously “dark”, it’s exciting to think of the use cases that will drive new business models. Imagine, for example, being able to track the temperature, motion, and location of an object as simple as a paper coffee cup. The market opportunity is enormous and is attracting a great deal of investment.

- Eliminate the battery

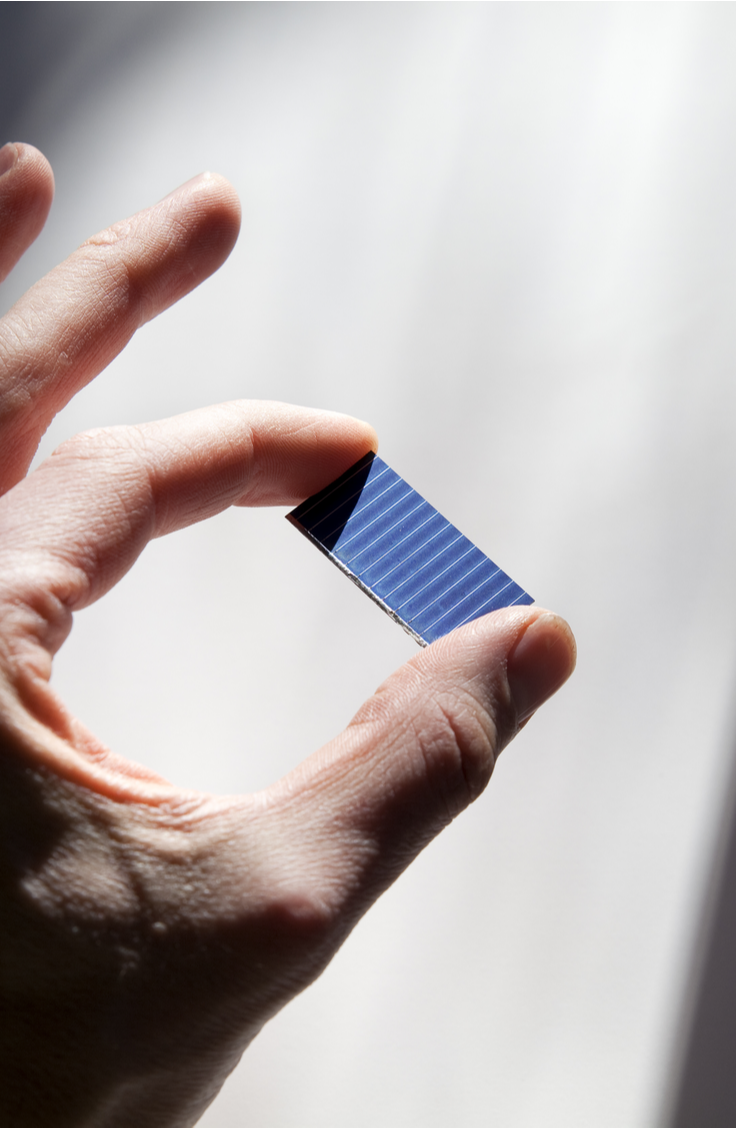

To “light up” these “dark” devices, you need energy sources other than batteries. The maintenance associated with battery replacement has been a limiting factor in the adoption of real-time location systems (RTLS) and the tags used to track things. What if these RTLS tags required no battery at all, allowing a tag to last as long as the asset itself? Solar energy is another area of tornadic research, and its dividends include small photovoltaic cells that harvest ambient light. As integrated circuits get smaller and their power requirements shrink, it is now possible to power a Bluetooth Low Energy (BLE) tag with light from the environment. These small amounts of energy are stored on the tag and provide continuous power. It is possible, for example, to power a future version of the AiRISTA A1 BLE tag using ambient light.
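
Whether ambient light can actually keep a tag running comes down to an energy budget: average harvested power has to exceed the tag’s average draw. This Go sketch does that arithmetic for a duty-cycled BLE advertiser. Every figure in it is a hypothetical placeholder, not a specification of the AiRISTA A1 or of any real photovoltaic cell.

```go
package main

import "fmt"

// Hypothetical figures for illustration only; they are not specifications
// of the AiRISTA A1 or of any real photovoltaic cell.
const (
	cellAreaCm2     = 2.0  // photovoltaic cell area (cm²)
	harvestUWPerCm2 = 10.0 // harvested power density under indoor light (µW/cm²)
	advertEnergyUJ  = 30.0 // energy spent per BLE advertising event (µJ)
	advertIntervalS = 5.0  // seconds between advertising events
	sleepPowerUW    = 1.0  // draw while sleeping between events (µW)
)

// averageLoadUW spreads the energy of one advertising event over the
// interval (µJ / s = µW) and adds the constant sleep draw.
func averageLoadUW(eventUJ, intervalS, sleepUW float64) float64 {
	return eventUJ/intervalS + sleepUW
}

// minIntervalS is the shortest advertising interval the harvester can
// sustain indefinitely. It assumes harvestedUW > sleepUW.
func minIntervalS(eventUJ, harvestedUW, sleepUW float64) float64 {
	return eventUJ / (harvestedUW - sleepUW)
}

func main() {
	harvested := cellAreaCm2 * harvestUWPerCm2
	load := averageLoadUW(advertEnergyUJ, advertIntervalS, sleepPowerUW)
	fmt.Printf("harvested %.1f µW, average load %.1f µW\n", harvested, load)
	fmt.Printf("shortest sustainable interval: %.2f s\n",
		minIntervalS(advertEnergyUJ, harvested, sleepPowerUW))
	if harvested >= load {
		fmt.Println("surplus energy can top up the tag's storage element")
	} else {
		fmt.Println("deficit: advertise less often or use a larger cell")
	}
}
```

With these assumed numbers the cell harvests 20 µW against an average load of 7 µW, so the tag could advertise as often as every 1.58 s and still break even. Dimmer light or a costlier advertising event shifts that balance, which is why interval tuning matters for light-powered tags.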

Another technology, battery-less BLE, is dramatically shrinking the form factor and cost of tags. This technology harvests energy from the RF signals in the airwaves that surround us and powers tags that can be printed, much like a paper RFID tag. The harvested energy is used to send a BLE signal carrying a unique identifier to BLE radios in the area. Devices with BLE receivers, such as your phone, household speakers, Echo devices, or even ceiling lights, become gateways through which the tag’s unique ID is delivered over the internet to an RTLS application tracking that tag. Companies like Wiliot see opportunities to tag items as simple as a paper coffee cup, tracking not only its temperature but also the path it takes from production, through distribution, to the consumer in line at Starbucks.
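
The tag-to-gateway-to-application flow can be sketched as a small tracker on the RTLS side. In this Go sketch, which is illustrative and not Wiliot’s or AiRISTA’s API, gateways forward each sighting of a tag’s ID along with its received signal strength (RSSI), and the application treats the gateway that hears the tag most strongly within a time window as the tag’s approximate location. The type names, the 30-second window, and the “nearest gateway” rule are all assumptions.

```go
package main

import "fmt"

// Sighting is what a hypothetical BLE gateway (a phone, speaker, or light
// fixture) forwards to the RTLS application when it hears a tag.
type Sighting struct {
	TagID     string
	GatewayID string
	RSSI      int   // received signal strength in dBm; closer to 0 is stronger
	Timestamp int64 // Unix seconds
}

// Tracker keeps the best recent sighting per tag. Treating the
// strongest-signal gateway as the tag's location is a simple "nearest
// gateway" approximation, not the only way RTLS systems locate tags.
type Tracker struct {
	window int64 // a stored sighting older than this (seconds) is replaced regardless of RSSI
	last   map[string]Sighting
}

func NewTracker(windowSeconds int64) *Tracker {
	return &Tracker{window: windowSeconds, last: make(map[string]Sighting)}
}

// Report records a sighting, replacing the stored one if the new one is
// stronger or the stored one has gone stale.
func (t *Tracker) Report(s Sighting) {
	prev, ok := t.last[s.TagID]
	if !ok || s.RSSI > prev.RSSI || s.Timestamp-prev.Timestamp > t.window {
		t.last[s.TagID] = s
	}
}

// Locate returns the gateway currently associated with the tag.
func (t *Tracker) Locate(tagID string) (string, bool) {
	s, ok := t.last[tagID]
	return s.GatewayID, ok
}

func main() {
	tr := NewTracker(30)
	tr.Report(Sighting{"cup-42", "kitchen-light", -70, 100})
	tr.Report(Sighting{"cup-42", "counter-speaker", -55, 105}) // stronger: wins
	tr.Report(Sighting{"cup-42", "door-phone", -80, 110})      // weaker and recent: ignored
	tr.Report(Sighting{"cup-42", "street-phone", -85, 140})    // stored one is stale: replaced
	gw, _ := tr.Locate("cup-42")
	fmt.Println("cup-42 last located near", gw)
}
```

Keeping only the best recent sighting per tag makes location a cheap map lookup, while the staleness window lets a tag that has moved away from a strong gateway be picked up later by a weaker one.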

- Smaller, cheaper, faster

Autonomous cars are possible thanks to sensor fusion, the combination of multiple inputs to drive decisions.

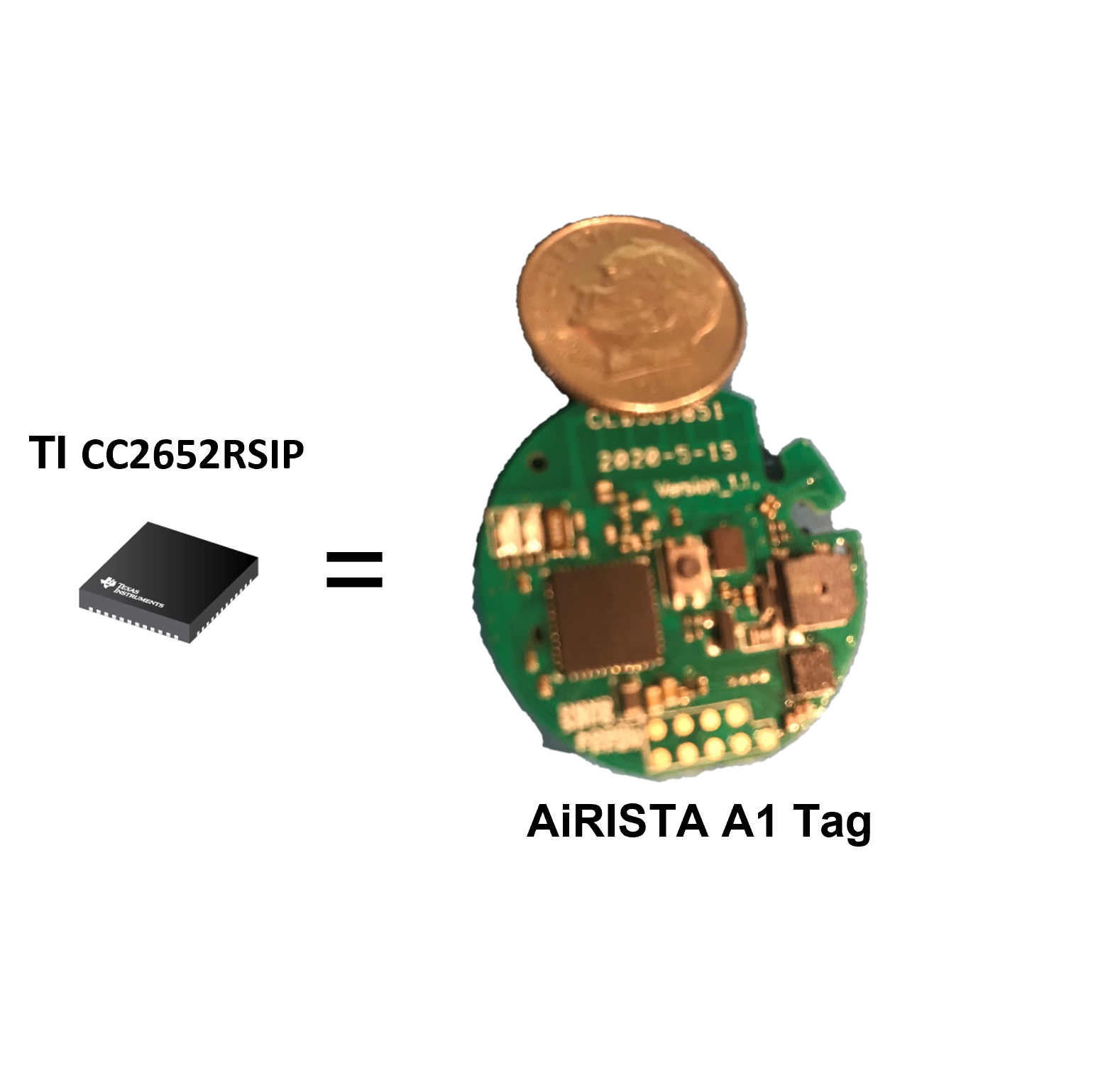

Similarly, RTLS tags can combine location information with sensor inputs like temperature, humidity, vibration, and motion to generate alerts and drive decisions. As circuits shrink, more functionality can be consolidated into a single chip. Companies like Texas Instruments have taken components that once required a small printed circuit board and collapsed them into a single module. These “systems in a package” are small enough to be mounted on flexible backings. Imagine a roll of tape that dispenses RTLS tags that can be stuck to curved surfaces like poles, tanks, or even human limbs. And because they need no battery, they never need to be removed.
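
Combining location with sensor readings to raise alerts can be sketched as a small rule engine. In this Go sketch the reading fields, zone names, and thresholds are hypothetical; the point is that a rule can be scoped to a location, so the same temperature can be normal in one zone and an alarm in another.

```go
package main

import "fmt"

// Reading is a hypothetical combined report from an RTLS tag: where the
// tag is, plus what its onboard sensors measured.
type Reading struct {
	TagID        string
	Zone         string // location reported by the RTLS system
	TemperatureC float64
	HumidityPct  float64
	Moving       bool
}

// Rule pairs a zone with a condition; an empty Zone matches every zone.
type Rule struct {
	Name string
	Zone string
	When func(Reading) bool
}

// Evaluate returns the names of all rules the reading triggers, in rule order.
func Evaluate(r Reading, rules []Rule) []string {
	var alerts []string
	for _, rule := range rules {
		if (rule.Zone == "" || rule.Zone == r.Zone) && rule.When(r) {
			alerts = append(alerts, rule.Name)
		}
	}
	return alerts
}

func main() {
	// Illustrative thresholds only.
	rules := []Rule{
		{"cold-chain breach", "cold-storage", func(r Reading) bool { return r.TemperatureC > 8 }},
		{"high humidity", "", func(r Reading) bool { return r.HumidityPct > 85 }},
		{"unexpected movement", "warehouse", func(r Reading) bool { return r.Moving }},
	}
	r := Reading{TagID: "pallet-7", Zone: "cold-storage", TemperatureC: 9.5, HumidityPct: 90}
	fmt.Println(r.TagID, Evaluate(r, rules))
}
```

Here the pallet triggers both the cold-storage temperature rule and the zone-independent humidity rule, while the movement rule is skipped because it is scoped to a different zone.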

- Intelligence at the edge

According to a 2021 Gartner analysis, “By 2025, 40% or nearly 890 million of new Internet of Things (IoT) “things” will know their location, up from under 10% (or less than 150 million today).”[i] When sensors are applied to virtually any “thing” and those things become location-aware, an orchestration of complex systems can take shape. To create a “fusion” of these sensors, local intelligence provides the orchestration, much as it does in the autonomous vehicle. Process flows can be turned upside down. Most process flows are governed by centralized intelligence that pushes decisions down the process chain. With coordination and intelligence at the edge, process flows can be directed from the edge back up the chain. Think of the Starbucks coffee cup that triggers a production signal up the various supply chains when a customer leaves the store with coffee in hand.
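
One way to picture a process flow driven from the edge is a chain of reorder points, where an event at the store propagates upstream without a central planner. This Go sketch models that under simplifying assumptions (hypothetical node names, stock levels, and batch sizes, and replenishment that arrives instantly); it is an illustration, not a description of any real supply-chain system.

```go
package main

import "fmt"

// Node is a hypothetical stage in a supply chain: a store, a distributor,
// or the factory that makes the cups.
type Node struct {
	Name     string
	Stock    int
	Reorder  int   // at or below this level, signal upstream for more
	Batch    int   // units requested per replenishment
	Upstream *Node // nil at the source of production
}

// Consume records units leaving this node (for the store, a customer
// walking out with a tagged cup). If stock reaches the reorder point, it
// emits a signal and draws the batch from upstream, which may cascade
// further up the chain. At the source, the signal stands in for a
// production order.
func (n *Node) Consume(units int) []string {
	n.Stock -= units
	if n.Stock > n.Reorder {
		return nil
	}
	signals := []string{fmt.Sprintf("%s requests %d", n.Name, n.Batch)}
	if n.Upstream != nil {
		signals = append(signals, n.Upstream.Consume(n.Batch)...)
	}
	n.Stock += n.Batch // simplification: replenishment arrives instantly
	return signals
}

func main() {
	factory := &Node{Name: "factory", Stock: 60, Reorder: 20, Batch: 100}
	distributor := &Node{Name: "distributor", Stock: 30, Reorder: 10, Batch: 50, Upstream: factory}
	store := &Node{Name: "store", Stock: 3, Reorder: 2, Batch: 20, Upstream: distributor}

	// One cup leaves the store; the event propagates up the chain on its own.
	for _, s := range store.Consume(1) {
		fmt.Println(s)
	}
}
```

A single cup leaving the store brings the store to its reorder point, the store’s request brings the distributor to its own reorder point, and that request in turn drops the factory low enough to schedule production: three signals flowing up the chain from one edge event.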

Intelligence at the edge requires decision making, data management, and communication between edge devices. Solutions like AiRISTA’s Unified Vision Solution (UVS) are software platforms built for an IoT world. UVS is designed as a broker architecture whose software logic components can run on small, low-cost computers like a Raspberry Pi. Written in Go (aka Golang), these modules are AI-friendly and ready to analyze video streams in real time. Communication is handled by streams that make the massive amounts of data generated by IoT endpoints available to brokers, which subscribe to specific “topics” of interest. Lastly, there is communication between things. The TI “system on a module”, for example, supports multiple wireless technologies to pull a variety of devices together into a world of orchestrated IoT “things” at the edge. Sensor fusion combined with location will prove that the autonomous vehicle was only the beginning of what is possible.
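
The topic-based broker pattern described above can be sketched with Go’s channels. This is a minimal in-process illustration, assuming a single process and string payloads; it is not UVS’s actual API, and a real deployment would typically put a networked broker between devices.

```go
package main

import (
	"fmt"
	"sync"
)

// Message is a payload published on a named topic.
type Message struct {
	Topic   string
	Payload string
}

// Broker is a minimal in-process publish/subscribe hub illustrating the
// topic-based pattern; it is not AiRISTA UVS's actual API.
type Broker struct {
	mu   sync.RWMutex
	subs map[string][]chan Message
}

func NewBroker() *Broker {
	return &Broker{subs: make(map[string][]chan Message)}
}

// Subscribe returns a buffered channel that receives every message
// published on topic from now on.
func (b *Broker) Subscribe(topic string, buffer int) <-chan Message {
	ch := make(chan Message, buffer)
	b.mu.Lock()
	b.subs[topic] = append(b.subs[topic], ch)
	b.mu.Unlock()
	return ch
}

// Publish delivers msg to every subscriber of its topic and reports how many
// received it. A subscriber whose buffer is full misses the message rather
// than stalling the publisher.
func (b *Broker) Publish(msg Message) (delivered int) {
	b.mu.RLock()
	defer b.mu.RUnlock()
	for _, ch := range b.subs[msg.Topic] {
		select {
		case ch <- msg:
			delivered++
		default: // buffer full: drop for this slow subscriber
		}
	}
	return delivered
}

// Close closes and forgets all subscriber channels; call it once publishing has stopped.
func (b *Broker) Close() {
	b.mu.Lock()
	defer b.mu.Unlock()
	for topic, chans := range b.subs {
		for _, ch := range chans {
			close(ch)
		}
		delete(b.subs, topic)
	}
}

func main() {
	b := NewBroker()
	temps := b.Subscribe("tags/temperature", 8)
	_ = b.Subscribe("tags/location", 8) // a subscriber to a different topic

	b.Publish(Message{"tags/temperature", "cup-42: 61.5C"})
	b.Publish(Message{"tags/temperature", "cup-43: 58.0C"})
	b.Close()

	// Draining a closed, buffered channel yields the queued messages, then ends.
	for m := range temps {
		fmt.Println("got", m.Topic, m.Payload)
	}
}
```

Subscribers receive only the topics they asked for, and a slow subscriber drops messages instead of blocking the publisher. Whether to drop, block, or buffer is a design choice that depends on how much data the IoT endpoints generate and how much loss the application can tolerate.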

[i] Gartner, “Emerging Technologies: Venture Capital Growth Insights for Indoor Location Services”, Annette Zimmerman, Danielle Casey, Tim Zimmerman, May 14, 2021